What Is the PowerShell Copy-Item Cmdlet?

The Copy-Item cmdlet in PowerShell copies items, most commonly files and folders, from a source to a destination. It can copy a single item or many items at once, along with their content. It also works across different locations, such as local drives and network shares.

Basic Syntax and Parameters

The basic syntax for the PowerShell Copy-Item cmdlet is as follows:

Copy-Item -Path <source> -Destination <destination> [<parameters>]

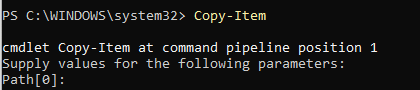

If you type only the cmdlet name, PowerShell prompts you for the source path:

The source path is mandatory. The -Path parameter specifies the item or items to be copied, and the source can be a file or a folder. Multiple paths can be specified as a comma-separated list, and the path can contain wildcards. The -Destination parameter specifies where the items should be copied. If you omit it, Copy-Item uses the current location, so a best practice is to always supply it explicitly. When copying a single item, the destination path can include a new name for the copied item if desired.

Below is a list of other parameters for the Copy-Item PowerShell cmdlet:

- -Recurse: Copies subdirectories and their content recursively, which means that the Copy-Item cmdlet is applied to the top-level folder and all levels of subfolders beneath it.

- -Force: Copies items that cannot otherwise be changed, such as overwriting an existing read-only file. It does not create missing parent directories for a file copy.

- -Container: Preserves container objects during copy operations, which is useful when copying directory structures.

- -Filter: Specifies a filter string to qualify the path parameter, such as specifying a particular file extension

- -Include: Specifies items to copy and supports wildcards. Only items that match both Path and Include parameters are copied

- -Exclude: Specifies items to omit from copying, supports wildcards

- -Credential: Specifies the user account credentials to use for the operation

- -WhatIf: Shows what would happen if the command ran without actually executing it

- -Confirm: Prompts for confirmation before executing the command

- -FromSession: Specifies the PSSession object from which to get a remote file.

- -ToSession: Specifies the PSSession object to which to send a remote file

Common Use Cases

For most examples, we will copy files to multiple locations on the local computer. There are, however, a variety of common use cases for the Copy-Item cmdlet, including:

- Creating backups of important files and folders

- Distributing files or simple applications across multiple machines

- Migrating large amounts of files between servers or storage locations

- Creating snapshots of project folders at different stages of development

- Distributing configuration files across multiple servers

Now let’s compare the Copy-Item cmdlet with other file management cmdlets:

Copy-Item vs. Move-Item:

- Copy-Item creates a duplicate, leaving the original intact

- Move-Item relocates the file/folder, removing it from the original location

Copy-Item vs. Get-ChildItem

- Copy-Item performs the action of copying

- Get-ChildItem lists files and folders and is often used in conjunction with Copy-Item, for example to select the items to copy

Note that the Copy-Item cmdlet is recommended for basic file copying. Those seeking more advanced features for large file transfers or mirroring may consider Microsoft Robocopy.

Copying Files with Copy-Item PowerShell Cmdlet

Basic File Copy Example

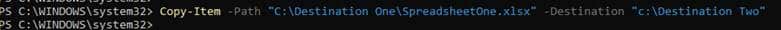

In this example, we want to copy a spreadsheet from one folder to another using the PowerShell copy item command.

Copy-Item -Path "C:\Destination One\SpreadsheetOne.xlsx" -Destination "c:\Destination Two"

Here is what it looks like in PowerShell:

Copying Multiple Files

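Now, let's copy more than one file at a time. Note that the source and destination paths are on different drives. Make sure the destination folder already exists: when you copy files to a destination path that does not exist, Copy-Item treats that path as a new file name rather than creating a folder, so each file would overwrite the previous one.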

Copy-Item -Path "C:\source\file1.txt","C:\Source\file2.txt" -Destination "D:\Destination Two"

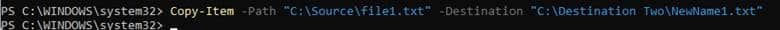
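The following is a minimal sketch of how you might make sure the destination folder exists before copying several files into it. The folder and file names are placeholders created under the temp folder purely for illustration; they are not part of the original example.

```powershell
# Hypothetical demo paths under the temp folder; substitute your own.
$root        = Join-Path ([System.IO.Path]::GetTempPath()) ("copy-demo-" + [guid]::NewGuid())
$source      = Join-Path $root 'source'
$destination = Join-Path $root 'Destination Two'

# Build a small source folder with two files to copy.
New-Item -ItemType Directory -Path $source -Force | Out-Null
$files = @(
    (Join-Path $source 'file1.txt'),
    (Join-Path $source 'file2.txt')
)
foreach ($file in $files) { Set-Content -LiteralPath $file -Value 'sample' }

# Create the destination folder only if it is missing.
if (-not (Test-Path -LiteralPath $destination -PathType Container)) {
    New-Item -ItemType Directory -Path $destination | Out-Null
}

# Both files now land inside the folder instead of being written to one file.
Copy-Item -LiteralPath $files -Destination $destination

# Collect the names of the copied files for inspection.
$copiedNames = @(Get-ChildItem -LiteralPath $destination -File |
    Sort-Object Name |
    Select-Object -ExpandProperty Name)

# Remove the demo folder.
Remove-Item -LiteralPath $root -Recurse -Force
```

Checking with Test-Path first keeps the script safe to run repeatedly, because New-Item is only called when the folder is actually missing.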

Copying Files with Renaming

You can copy a file to a new folder and rename it in the process using the below command:

Copy-Item -Path "C:\Source\file1.txt" -Destination "C:\Destination Two\NewName1.txt"

Here is what it looks like in PowerShell:

Copying Folders with PowerShell

The copying ability of PowerShell isn’t just limited to files – you can also copy a folder using the Copy-Item cmdlet to new locations. Below, we show you how to use the PowerShell copy folder and contents command.

Basic Folder Copy Example

Use the command below to copy a folder to a different location. Without the -Recurse parameter, Copy-Item copies only the folder itself: it creates an empty folder at the destination and leaves the files inside the source folder behind.

Copy-Item -Path "C:\source\folder" -Destination "D:\destination"

Copying Folders with Subfolders

What if you want the folder's contents as well? The basic command above copies none of the files or subdirectories inside the source folder. To copy everything in the source folder, including the contents of subdirectories, add the -Recurse parameter.

Copy-Item -Path "C:\source\folder" -Destination "D:\destination" -Recurse
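To see the effect of -Recurse for yourself, here is a small sketch that copies the same folder twice, once without and once with the parameter, and counts what arrives. All folder and file names are hypothetical and are created under the temp folder.

```powershell
# Hypothetical demo tree under the temp folder.
$root   = Join-Path ([System.IO.Path]::GetTempPath()) ("recurse-demo-" + [guid]::NewGuid())
$source = Join-Path $root 'folder'
$sub    = Join-Path $source 'sub'

# Source layout: folder\top.txt and folder\sub\nested.txt
New-Item -ItemType Directory -Path $sub -Force | Out-Null
Set-Content -LiteralPath (Join-Path $source 'top.txt') -Value 'top'
Set-Content -LiteralPath (Join-Path $sub 'nested.txt') -Value 'nested'

$shallow = Join-Path $root 'shallow'
$deep    = Join-Path $root 'deep'

# Without -Recurse: only the container is created at the destination.
Copy-Item -Path $source -Destination $shallow

# With -Recurse: files and subfolders are copied as well.
Copy-Item -Path $source -Destination $deep -Recurse

# Count what each copy produced.
$shallowItemCount = @(Get-ChildItem -LiteralPath $shallow -Recurse).Count
$deepFileCount    = @(Get-ChildItem -LiteralPath $deep -Recurse -File).Count

Write-Output "Items copied without -Recurse: $shallowItemCount"
Write-Output "Files copied with -Recurse:    $deepFileCount"

# Remove the demo folder.
Remove-Item -LiteralPath $root -Recurse -Force
```

Because neither destination exists beforehand, each copy takes the destination path as the name of the new folder. If the destination already exists, the source folder is placed inside it instead.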

Copying and Merging Multiple Folders

Let's say you want to copy the files of two source folders and merge them into a single destination folder. To do so, list both source paths, separated by a comma, and end each with a wildcard so that the contents of the folders are copied rather than the folders themselves. The items from both sources are then placed in the same destination folder, which should already exist. If both sources contain a file with the same name, the later copy overwrites the earlier one.

Copy-Item -Path "C:\source1\*","C:\source2\*" -Destination "D:\destination"

Advanced File and Folder Copy Techniques

Using Wildcards in File Paths

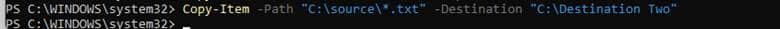

Wildcards allow you to copy multiple files or folders that match a specific pattern without listing each item individually. They are ideal for dealing with large numbers of files or folders, as using wildcards can significantly reduce the amount of code you need. In the example below, the wildcard is used for any .txt file:

Copy-Item -Path "C:\source\*.txt" -Destination "C:\Destination Two"

Here’s what it looks like in PowerShell:

Filtering Files with Include and Exclude Parameters

There will be times when you want to copy only some of the files in a folder. You may want to include only certain file types or versions, or leave out files that contain sensitive information. During a backup or migration, you will likely also want to skip temporary or unnecessary files.

In the example below, -Include limits the copy to log files, and -Exclude then removes anything matching *.tmp from that selection. Both parameters match against item names and work as expected here because the path ends with a wildcard.

Copy-Item -Path "C:\source\*" -Destination "D:\destination" -Include "*.log" -Exclude "*.tmp"
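As a rough illustration of how -Include and -Exclude combine, the sketch below builds a throwaway folder and copies only the log files, minus the ones whose names start with "debug". The file names and the 'debug*' pattern are assumptions chosen for the demonstration.

```powershell
# Hypothetical demo folders under the temp folder.
$root        = Join-Path ([System.IO.Path]::GetTempPath()) ("filter-demo-" + [guid]::NewGuid())
$source      = Join-Path $root 'source'
$destination = Join-Path $root 'destination'
New-Item -ItemType Directory -Path $source, $destination -Force | Out-Null

# Sample files: two logs, one temp file, one text file.
foreach ($name in 'app.log', 'debug.log', 'app.tmp', 'notes.txt') {
    Set-Content -LiteralPath (Join-Path $source $name) -Value $name
}

# -Include keeps only *.log; -Exclude then drops names starting with "debug".
Copy-Item -Path (Join-Path $source '*') -Destination $destination -Include '*.log' -Exclude 'debug*'

# Collect the names that reached the destination.
$copied = @(Get-ChildItem -LiteralPath $destination -File | Select-Object -ExpandProperty Name)
Write-Output "Copied: $($copied -join ', ')"

# Remove the demo folder.
Remove-Item -LiteralPath $root -Recurse -Force
```

Running a command like this with -WhatIf first is a cheap way to confirm that the patterns select what you expect.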

Using the Force Parameter to Overwrite Files

Copy-Item overwrites existing files at the destination without prompting. The exception is a read-only file: if the destination folder contains a read-only file with the same name as the file you are copying, Copy-Item will not overwrite it by default. You can override this behavior and force the overwrite using the -Force parameter. Here's an example of how to use it:

Copy-Item -Path "C:\source\file.txt" -Destination "D:\destination" -Force
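The sketch below reproduces the read-only scenario in a temporary folder so you can observe the behavior safely. The paths and file contents are made up for the demonstration, and whether the first (non-forced) copy fails can depend on the platform and account you run it under.

```powershell
# Hypothetical demo paths under the temp folder.
$root       = Join-Path ([System.IO.Path]::GetTempPath()) ("force-demo-" + [guid]::NewGuid())
$destDir    = Join-Path $root 'destination'
$sourceFile = Join-Path $root 'file.txt'
$destFile   = Join-Path $destDir 'file.txt'

New-Item -ItemType Directory -Path $destDir -Force | Out-Null
Set-Content -LiteralPath $sourceFile -Value 'new content'
Set-Content -LiteralPath $destFile -Value 'old content'

# Mark the existing destination file as read-only.
Set-ItemProperty -LiteralPath $destFile -Name IsReadOnly -Value $true

# Try a plain copy first and record whether it was rejected.
$plainCopyFailed = $false
try {
    Copy-Item -LiteralPath $sourceFile -Destination $destDir -ErrorAction Stop
}
catch {
    $plainCopyFailed = $true
}

# With -Force, the read-only file is replaced.
Copy-Item -LiteralPath $sourceFile -Destination $destDir -Force
$finalContent = Get-Content -LiteralPath $destFile

Write-Output "Plain copy failed: $plainCopyFailed"
Write-Output "Content after -Force: $finalContent"

# Remove the demo folder.
Remove-Item -LiteralPath $root -Recurse -Force
```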

Copying Files and Folders to Remote Computers via Copy-Item

Although there are situations where you might need to copy files and folders to different drive locations on a local computer, the true strength of the Copy-Item cmdlet lies in its ability to facilitate the transfer of files and folders between remote computers.

Setting Up a Remote Session with New-PSSession

Let's say you want to copy some files to a remote computer using PowerShell. While you may have local admin rights, your current account may not have the proper rights on the destination computer. In that case, you need the computer name of the remote machine and an alternative credential to establish a remote session with it. The command below creates the session and stores it in the $session variable; PowerShell prompts for the password of the specified user.

$session = New-PSSession -ComputerName "RemotePC" -Credential "User"

Copying Files to a Remote Computer

You can now copy files to the remote computer using the established session.

Copy-Item -Path "C:\source\file.txt" -Destination "C:\remote\destination" -ToSession $session

Copying Files from a Remote Computer

Of course, you may want to copy files from a remote computer to your local computer. To do this, use the following command.

Copy-Item -Path "C:\remote\source\file.txt" -Destination "C:\local\destination" -FromSession $session

Recursively Copying Remote Folders

In the previous examples involving remote computers, subfolders and their contents were not included in the copy operation. To include the entire contents of the source path, including all subfolders and files, add the -Recurse parameter to the Copy-Item command, just as we did with local copy operations.

Copy-Item -Path "C:\remote\source\folder" -Destination "C:\local\destination" -FromSession $session -Recurse

Error Handling and Verification

Using WhatIf and Confirm Parameters

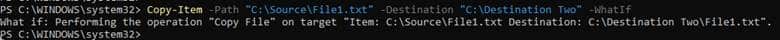

The WhatIf parameter allows you to verify the outcome of a command without making any changes. For instance, as shown below, you might want to confirm that the source and destination paths are correct before executing the copy operation.

The WhatIf output lists each item that would be copied together with its destination, so you can see exactly which items are affected and spot files that would be overwritten before any data is lost. The command is shown below.

Copy-Item -Path "C:\Source\File1.txt" -Destination "C:\Destination Two" -WhatIf

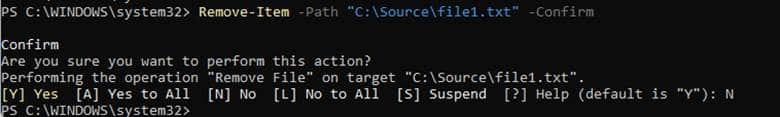

By default, most PowerShell cmdlets make changes to the system without confirmation from the user. There may be instances where you want the user to confirm an action before it is executed. The best example is using PowerShell to remove a file. In the example below, the -Confirm parameter prompts the user for confirmation.

Remove-Item -Path C:\file.txt -Confirm

The command will be executed in PowerShell, as shown below:

You can add the -Confirm parameter to any Copy-Item command.

Verifying the Copy Process

When using PowerShell to back up critical files, you may want to verify that the copy process occurred. You can create a simple PowerShell script, such as the one below, that confirms the copy by checking that each top-level item in the source folder also exists in the destination folder.

# Define source and destination paths

$source = "C:\SourceFolder"

$destination = "C:\DestinationFolder"

# Copy the files from source to destination

Copy-Item -Path $source\* -Destination $destination -Recurse

# Confirm the files were copied by checking if they exist in the destination folder

$sourceFiles = Get-ChildItem -Path $source

$allCopied = $true

foreach ($file in $sourceFiles) {

$destinationFile = Join-Path $destination $file.Name

if (Test-Path $destinationFile) {

Write-Host "File '$($file.Name)' was copied successfully."

} else {

Write-Host "File '$($file.Name)' failed to copy."

$allCopied = $false

}

}

if ($allCopied) {

Write-Host "All files copied successfully."

} else {

Write-Host "Some files failed to copy."

}
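If an existence check is not strict enough, one possible extension is to compare file hashes across the whole tree. The sketch below is an illustration rather than a definitive implementation: Test-CopyIntegrity is a hypothetical helper name, SHA256 is an arbitrary choice of algorithm, and the demo folders are created under the temp folder.

```powershell
# Hypothetical helper: emits one message per file that is missing or differs.
function Test-CopyIntegrity {
    param(
        [Parameter(Mandatory)] [string] $Source,
        [Parameter(Mandatory)] [string] $Destination
    )

    $sourceRoot = (Get-Item -LiteralPath $Source).FullName

    foreach ($file in Get-ChildItem -LiteralPath $sourceRoot -Recurse -File) {
        # Path of the file relative to the source root.
        $relative = $file.FullName.Substring($sourceRoot.Length).TrimStart('\', '/')
        $target   = Join-Path $Destination $relative

        if (-not (Test-Path -LiteralPath $target -PathType Leaf)) {
            "Missing: $relative"
            continue
        }

        # Compare content hashes of the source file and its copy.
        $sourceHash = (Get-FileHash -LiteralPath $file.FullName -Algorithm SHA256).Hash
        $targetHash = (Get-FileHash -LiteralPath $target -Algorithm SHA256).Hash
        if ($sourceHash -ne $targetHash) { "Different: $relative" }
    }
}

# Demo: build a small tree, copy it, verify, then tamper with one copy.
$root        = Join-Path ([System.IO.Path]::GetTempPath()) ("verify-demo-" + [guid]::NewGuid())
$source      = Join-Path $root 'SourceFolder'
$destination = Join-Path $root 'DestinationFolder'
New-Item -ItemType Directory -Path (Join-Path $source 'sub') -Force | Out-Null
Set-Content -LiteralPath (Join-Path $source 'a.txt') -Value 'alpha'
Set-Content -LiteralPath (Join-Path (Join-Path $source 'sub') 'b.txt') -Value 'beta'

Copy-Item -Path $source -Destination $destination -Recurse

$problemsAfterCopy = @(Test-CopyIntegrity -Source $source -Destination $destination)

# Simulate corruption of one copied file.
Set-Content -LiteralPath (Join-Path $destination 'a.txt') -Value 'changed'
$problemsAfterTamper = @(Test-CopyIntegrity -Source $source -Destination $destination)

Write-Output "Problems after copy:   $($problemsAfterCopy.Count)"
Write-Output "Problems after tamper: $($problemsAfterTamper.Count)"

# Remove the demo folder.
Remove-Item -LiteralPath $root -Recurse -Force
```

Hashing reads every file twice, so for very large data sets you may prefer to compare file sizes first and hash only the files you care most about.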

Handling Errors and Retries

Error handling helps ensure that large copy jobs behave predictably and can recover from unexpected failures. In PowerShell, the primary way to handle errors is through try-catch blocks. Because Copy-Item errors are non-terminating by default, the script below adds -ErrorAction Stop so that a failure reaches the catch block.

# Define source and destination paths

$source = "C:\SourceFolder"

$destination = "C:\DestinationFolder"

# Function to copy files with error handling

function Copy-Files {

try {

# Copy files; -ErrorAction Stop turns a copy failure into a terminating error that catch can handle

Copy-Item -Path $source\* -Destination $destination -Recurse -ErrorAction Stop

Write-Host "Files copied successfully."

}

catch {

# Log the error message

Write-Error "An error occurred while copying files: $($_.Exception.Message)"

# Perform additional actions, like retrying or logging

}

finally {

Write-Host "Copy operation complete."

}

}

# Execute the function

Copy-Files
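The catch block above mentions retrying, so here is one way a retry loop might look. This is a sketch under stated assumptions: Copy-ItemWithRetry is a hypothetical function name, and the attempt count and delay are arbitrary defaults that you would tune for your environment.

```powershell
# Hypothetical helper: retries a copy a fixed number of times before giving up.
function Copy-ItemWithRetry {
    param(
        [Parameter(Mandatory)] [string] $Path,
        [Parameter(Mandatory)] [string] $Destination,
        [int] $MaxAttempts = 3,
        [int] $DelayMilliseconds = 500
    )

    for ($attempt = 1; $attempt -le $MaxAttempts; $attempt++) {
        try {
            # Stop on error so that the failure reaches the catch block.
            Copy-Item -Path $Path -Destination $Destination -Recurse -Force -ErrorAction Stop
            return $true
        }
        catch {
            Write-Warning "Attempt $attempt of $MaxAttempts failed: $($_.Exception.Message)"
            # Wait before the next attempt, but not after the last one.
            if ($attempt -lt $MaxAttempts) {
                Start-Sleep -Milliseconds $DelayMilliseconds
            }
        }
    }

    return $false
}

# Demo with throwaway paths under the temp folder.
$root    = Join-Path ([System.IO.Path]::GetTempPath()) ("retry-demo-" + [guid]::NewGuid())
$destDir = Join-Path $root 'destination'
New-Item -ItemType Directory -Path $destDir -Force | Out-Null
$existingFile = Join-Path $root 'file.txt'
Set-Content -LiteralPath $existingFile -Value 'data'

# A source that exists succeeds on the first attempt.
$copySucceeded = Copy-ItemWithRetry -Path $existingFile -Destination $destDir

# A source that does not exist fails on every attempt.
$missingSucceeded = Copy-ItemWithRetry -Path (Join-Path $root 'missing.txt') -Destination $destDir -MaxAttempts 2 -DelayMilliseconds 10

Write-Output "Existing file copied: $copySucceeded"
Write-Output "Missing file copied:  $missingSucceeded"

# Remove the demo folder.
Remove-Item -LiteralPath $root -Recurse -Force
```

Retries are most useful for transient problems such as a briefly locked file or a network interruption. A missing source, as in the second call, will fail every time, so real scripts may want to distinguish between the two cases.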

Practical Examples and Use Cases of Copy-Item Cmdlet

Detailed Examples of Common Tasks

- Copying Files from a Failing Hard Drive: A hard drive shows signs of failure, and critical files need to be copied to a safe location before the drive fails.

- Backup Automation for Daily File Changes: For disaster recovery, a company might need to back up all files in a critical directory to a backup server.

- Migration from an On-Prem Server to Cloud Storage: A company might want to migrate its local server’s documents from local drives to a cloud storage provider such as Azure or AWS.

- Deploying Configuration Files to Multiple Servers: A system administrator wants to deploy updated configuration files from C:\ConfigUpdates to a list of remote servers.

Real-World Scenarios and Solutions

A complex file structure can present some challenges when using the Copy-Item cmdlet. For example, long file paths can be a problem on older Windows versions that don't support file paths greater than 260 characters. Switching to a UNC path does not lift that limit by itself. In those instances, you may be able to use the extended-length path prefix \\?\ together with -LiteralPath, or run the copy in a newer PowerShell version that supports long paths.

There may be instances when you need to use the -LiteralPath parameter in the Copy-Item cmdlet. This might be necessary when the path contains special characters (like [, ], or *) that PowerShell might otherwise interpret as wildcard characters or escape sequences.
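The difference is easiest to see with a file name that contains square brackets. In the sketch below, the file name report[1].txt and the folder layout are invented for the demonstration.

```powershell
# Hypothetical demo paths under the temp folder.
$root    = Join-Path ([System.IO.Path]::GetTempPath()) ("literal-demo-" + [guid]::NewGuid())
$destDir = Join-Path $root 'out'
New-Item -ItemType Directory -Path $destDir -Force | Out-Null

# Create a file whose name contains wildcard characters.
$source = Join-Path $root 'report[1].txt'
[System.IO.File]::WriteAllText($source, 'data')
$target = Join-Path $destDir 'report[1].txt'

# -Path interprets [1] as a wildcard set, so the pattern looks for "report1.txt"
# and is not expected to match the bracketed file.
Copy-Item -Path $source -Destination $destDir -ErrorAction SilentlyContinue
$copiedWithPath = Test-Path -LiteralPath $target

# -LiteralPath takes the name exactly as written.
Copy-Item -LiteralPath $source -Destination $destDir
$copiedWithLiteralPath = Test-Path -LiteralPath $target

Write-Output "Copied using -Path:        $copiedWithPath"
Write-Output "Copied using -LiteralPath: $copiedWithLiteralPath"

# Remove the demo folder.
Remove-Item -LiteralPath $root -Recurse -Force
```

Note that the check itself uses Test-Path -LiteralPath for the same reason: the path being tested contains brackets.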

Permission issues are also a common problem. You can use the -Force parameter to override some restrictions or run PowerShell as an administrator.

Sample Scripts for Automation

It is in your interest to automate as many manual tasks as possible to streamline them and avoid human error. Below is an example of a PowerShell script that is used to back up a folder to a backup directory.

# Define source and destination directories

$sourcePath = "C:\SourceDirectory"

$backupPath = "D:\BackupDirectory"

# Get current timestamp to append to the backup folder

$timestamp = Get-Date -Format "yyyyMMdd_HHmmss"

# Create a new folder for the current backup with timestamp

$backupDestination = "$backupPath\Backup_$timestamp"

New-Item -ItemType Directory -Path $backupDestination | Out-Null

# Copy all files and directories from the source to the backup folder

try {

Copy-Item -Path $sourcePath -Destination $backupDestination -Recurse -Force -ErrorAction Stop

Write-Output "Backup completed successfully on $(Get-Date)"

} catch {

Write-Output "An error occurred during the backup: $_"

}

# Optional: Clean up old backups (e.g., delete backups older than 30 days)

$daysToKeep = 30

$oldBackups = Get-ChildItem -Path $backupPath -Directory -Filter "Backup_*" | Where-Object { $_.CreationTime -lt (Get-Date).AddDays(-$daysToKeep) }

foreach ($backup in $oldBackups) {

try {

Remove-Item -Path $backup.FullName -Recurse -Force -ErrorAction Stop

Write-Output "Old backup removed: $($backup.FullName)"

} catch {

Write-Output "An error occurred while deleting old backups: $_"

}

}

Tips and Best Practices

Best Practices for Using Copy-Item

While the Copy-Item cmdlet is straightforward, here are a few best practices that might help you in more complex circumstances.

- Run PowerShell as Administrator when the source or destination requires elevated rights.

- Use the -Recurse parameter to copy entire directory structures.

- Utilize the -Force parameter judiciously to avoid unintended overwrites

- When copying large directories, consider alternatives such as Robocopy or other tools better suited for large jobs.

- Consider using -WhatIf for testing your commands to see what might happen without making actual changes.

Common Pitfalls and How to Avoid Them

In addition to best practices, you should also keep these common pitfalls in mind when using PowerShell scripting:

- Relative paths may not resolve as expected, because they depend on the current location. Use full paths to avoid copying from or to the wrong place.

- It is easy to forget about underlying subdirectories, so use the -Recurse parameter to ensure that all files contained in the parent directory are copied.

- Insufficient permission will prevent you from completing copy operations, especially when remote machines are involved. Ensure proper permissions and use elevated privileges when necessary.

- Complex copy operations can fail partway through without being noticed. Use try-catch blocks and implement proper error logging so that failures are caught and recorded.

Performance Considerations and Optimizations

Copying substantial data sets can take a long time and tie up system resources. Parallel processing is a computing technique that involves breaking down a task into smaller parts and executing them simultaneously across multiple processors or cores. Applied to file operations like those performed by the Copy-Item cmdlet, it means that multiple files are copied concurrently instead of one at a time.

This approach can reduce the time required for large copy operations, particularly those involving numerous files or complex directory hierarchies, because it uses available system resources more efficiently. The actual gain depends on the storage and network involved, since they often limit throughput more than the processor does.

While the Copy-Item cmdlet doesn't natively support parallel processing, PowerShell provides mechanisms like ForEach-Object -Parallel, available in PowerShell 7 and later, that can be combined with Copy-Item to achieve parallel file copying. A basic example is shown below:

$sourcePath = "C:\SourceFolder"

$destPath = "D:\DestinationFolder"

Get-ChildItem -Path $sourcePath -File | ForEach-Object -Parallel {

Copy-Item $_.FullName -Destination $using:destPath -Force

} -ThrottleLimit 8

Conclusion

The Copy-Item cmdlet is a versatile tool in PowerShell for managing file and directory operations. Its parameters cover a wide range of situations, from filtering and renaming to overwriting read-only files, and it combines well with error handling in scripts. Whether you copy files locally or across remote systems, Copy-Item performs essential copy functions efficiently. For large and complex data sets, consider implementing parallel processing to improve performance, or explore specialized tools designed for extensive data transfers. Mastering Copy-Item helps ensure accurate file transfers, making it an essential part of any PowerShell user's toolkit, while understanding its limitations allows for informed decisions on when to use alternative methods.